The Evolution of Technology: From Computers to AI and the Unchanging Quest for Perfection

In the days before computers, creating a resume was a laborious task that required precision and patience. On a typewriter, each keystroke was permanent, and any mistake meant starting over or reaching for correction fluid. The advent of computers promised a revolution: time saved and effortless editing. However, what followed was not a reduction in effort but a shift in focus. Instead of merely producing a document, we invested time in perfecting it, raising the standards of what a resume could and should be. Today, we stand on the cusp of another technological revolution with artificial intelligence (AI). Like computers before it, AI promises efficiency and ease. But will it truly save us time, or will we find ourselves once again raising the bar?

The rise of AI, much like the rise of computers, will not necessarily reduce the time we spend on tasks; instead, it will keep raising quality and expectations.

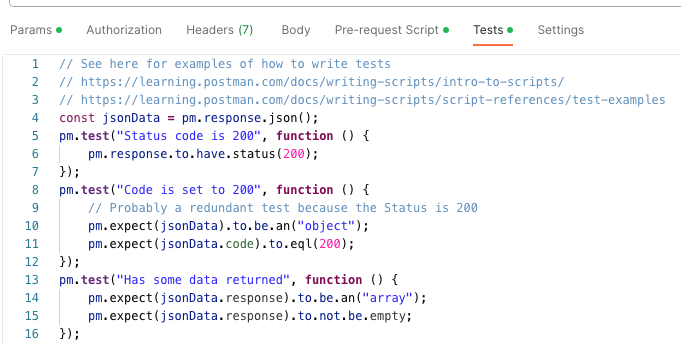

Photo by Ikowh Babayev: https://www.pexels.com/photo/man-wearing-red-crew-neck-sweater-16622/

Disclaimer: I used ChatGPT to assist with the setup of this article.

Prompt: Back in the days before computers, when we created our resume, we did it on typewriters. Then computers came along. We were told how much time we would save because the computer could save the information, and we would not need to spend time correcting the typewriter version. What ended up happening was that the standard was raised. We still spent the same amount of time on the resume, but the time went into making it pretty. In my article, I would like to compare the rise of AI with the rise of the computer. We will not be saving time because instead of getting time we will be investing into raising the standard of our submissions. I am not sure how to create the comparison. Can you please come up with something to help me get going?

The Era of Typewriters

Creating a resume on a typewriter was an exercise in meticulousness. Each letter had to be struck with care, since any mistake could mean starting from scratch. Corrections were cumbersome, often involving correction tape or correction fluid, which left unsightly marks. Formatting was a challenge, requiring manual alignment and spacing. The time investment was significant, but the result was a testament to one’s diligence and attention to detail.

The Advent of Computers

With the introduction of computers and word processors, the landscape of resume creation changed dramatically. The ability to save documents, make instant corrections, and experiment with formatting without fear of permanent error was a game-changer. Initially, it seemed that we would save countless hours. However, this newfound flexibility led to higher expectations. Resumes were no longer just about content; they became visual representations of professionalism. Time saved on corrections was now spent on choosing fonts, layouts, and even incorporating graphics. The standard had been raised, and the time invested remained substantial.

The Rise of AI

Artificial intelligence is now permeating various aspects of our lives, from smart assistants to advanced data analysis. In the realm of content creation and design, AI tools promise unprecedented efficiency and creativity. Platforms powered by AI can draft text, suggest improvements, design layouts, and optimize content for specific audiences. The allure is clear: faster production with enhanced quality. But, just as with computers, these advancements come with heightened expectations. An AI-generated resume isn’t just about listing qualifications; it’s about presenting them in a way that is both visually appealing and algorithmically optimized.

Comparison of AI and Computers

The promises made by AI today echo those made by computers decades ago. Both technologies are hailed for their potential to save time and increase productivity. However, in both cases, the reality is that the time saved on basic tasks is redirected towards meeting higher standards. For instance, while AI can draft a resume quickly, the focus shifts to refining and personalizing the output to stand out in an increasingly competitive job market. The cycle of technological advancement continues, with each innovation setting a new benchmark for excellence.

The Reality of Time Investment

Despite the promises of time-saving technology, the reality often involves reallocating that time towards enhancing quality. Consider the example of a graphic designer who now uses AI tools. While the initial design process may be faster, the designer now spends additional time fine-tuning the AI-generated elements to align with their vision. Similarly, job seekers use AI to draft resumes but invest time in customizing and perfecting the content to meet the higher standards set by employers.

Conclusion

In summary, the rise of AI mirrors the rise of computers in many ways. Both technologies promised to save time, yet both resulted in raised standards and continued time investment. As we embrace AI and its capabilities, it’s essential to recognize that the pursuit of excellence often means investing time in new ways. While technology evolves, the drive to meet and exceed expectations remains constant. In the end, it’s not just about saving time; it’s about using it wisely to create something better.